The capabilities of new AI tools are having a democratizing effect on the use of AI, ushering in a new wave of transformation that is spurring rapid adoption in every industry, including insurance. As insurers begin to bring new capabilities like generative summarization (among others) to their operations, there is a growing imperative to make sure they are deploying any AI functionalities responsibly.

Responsible AI is on the agenda of many insurance industry events. At the Association of Insurance Compliance Professionals (AICP) annual conference in Austin, we took part in a session about the compliance considerations for insurers and the responsible way to adopt AI technologies. At InsureTech Connect (ITC) in Las Vegas, arguably one of the largest industry events of the year, we joined a large expert panel around the theme of “Harmonizing Sustainability” to talk about the importance of applying a responsible approach for insurance AI.

Amid the new capabilities unleashed by generative AI and large language models (LLMs), the rapid pace of evolution and the resurgence of regulation, is responsible AI in insurance possible? And what does it look like?

AI’s Democratizing Influence

The launch of ChatGPT put the capabilities of generative AI and LLMs into the hands of everyone, catalyzing a new phase of innovation, investment and development that some are calling “AI’s iPhone moment” because of its potential to revolutionize how we live and interact.

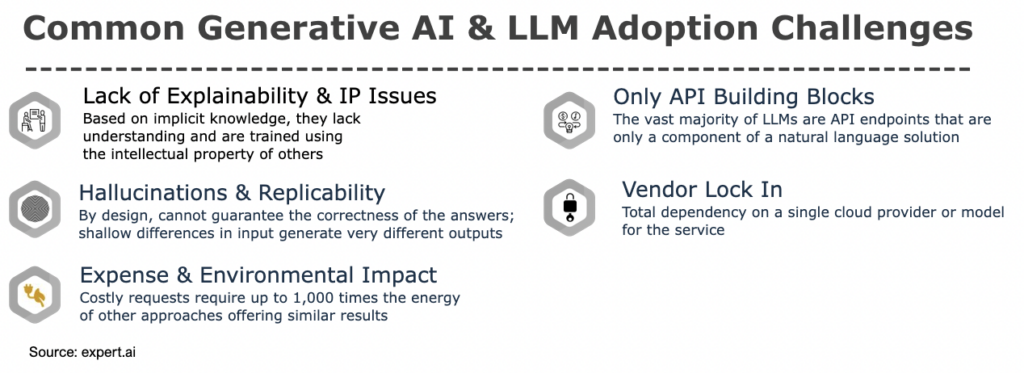

Today, insurers are experimenting with, and even using, these capabilities to accelerate processes, transform the customer journey and pull ahead of the competition. However, several aspects of these new capabilities make them problematic for insurance processes.

As we’ve said before, AI and capabilities like generative summarization are here to stay in the insurance industry. But companies will need to carefully consider the options available to them to ensure that they’re building or working with a safe, secure solution.

These are just some of the questions to consider:

- Are you working with a model built for the insurance domain or a general knowledge model?

- Does it meet current standards for security and compliance?

- How is proprietary data used in training?

A Big Year in AI Regulation

Concerns like these are part of the reason the push for AI regulation has ramped up since November 2022, and especially in recent months. In 2023, we started to see more tangible global AI regulation take shape.

- In Europe, lawmakers have reached a deal on the first legal framework for AI, the EU Artificial Intelligence Act. The AI Act establishes a set of rules that govern the development of AI technology, places transparency requirements on certain types of AI systems/models depending on their risk category, and sets penalties for noncompliance.

- As part of the UK’s AI Safety Summit held in November 2023, 28 governments signed the Bletchley Declaration, establishing a shared agreement on the need to cooperate on evaluating the risks of AI.

- In the US, the White House issued its Executive Order on AI that “will require more transparency from AI companies about how their models work. It will establish a raft of new standards, most notably for labeling AI-generated content.” The order advances the voluntary requirements for AI policy set in August 2023, including the blueprint for an AI Bill of Rights and an Executive Order directing agencies to combat algorithmic discrimination.

- For the insurance industry, the Innovation, Cybersecurity and Technology Committee of the National Association of Insurance Commissioners (NAIC) voted to adopt a Model Bulletin on the Use of Artificial Intelligence Systems by Insurers to “ensure the responsible deployment of AI in the insurance industry.” It addresses the potential for inaccuracies, bias and data risks, and serves to foster a uniform approach among state regulators for insurance.

- A group of insurtechs have launched the Ethical AI in Insurance Consortium to collaborate on industry standards for fairness and transparency in the use of AI.

- The state of Colorado is taking action to regulate the use of AI and big data in insurance. It may become one of the first states to regulate how insurers can use big data and AI-powered predictive models to determine risk for underwriting. The proposed Algorithm and Predictive Model Governance Regulation, currently in draft version, “explains oversight and risk management requirements that insurers would need to comply with, including obligations around documentation, accountability and transparency.”

- In January 2024, the state of New York issued guidance for how state-authorized insurers use external consumer data and information sources (ECDIS) and/or AI systems. The proposed insurance circular letter clarifies existing regulation and outlines the state’s expectations for insurers regarding fairness, governance, transparency and auditing.

Responsible AI for Insurers

The imperative is clear: The best way forward is to design and build in responsible AI principles now.

Here’s a checklist, based on our Green Glass approach to Responsible AI, to help you get started. Let’s dive deeper into the four principles for responsible AI that insurers must consider when choosing their AI approach.

Sustainable

AI systems should be effective and less energy intensive.

The massive computing requirements of LLMs and their impact on energy and water consumption are well documented. This creates an additional challenge for ESG reporting and internal sustainability goals, especially given Gartner’s prediction that by 2027, 25% of CIOs will see their personal compensation linked to their sustainable technology impact.

The fact is, bigger isn’t always better, and smaller, insurance domain-specific and less compute-intensive models can be extremely effective without the financial and sustainability costs of LLMs.

Considerations:

- Is there an existing model to use?

- Could a coordinated AI approach prevent duplicate project silos?

- Is there a simpler way to solve the problem that does not use Generative AI or LLMs?

Transparent

AI systems should be trusted and provide outcomes that are easily explainable and accountable.

Companies using AI for decision-making will need to provide explanations for the training data and model processes to comply with regulations. This includes clarifying how decisions are made for specific individuals or inputs.

“Inaccurate or biased algorithms can be problematic when they’re populating a social media feed, but things get much more serious when they’re deciding whether or not to give out a loan or deny an insurance claim.” – Usama Fayyad, Executive Director, Institute for Experiential AI at Northeastern University in Forbes

It’s also important for end users. Given the democratization of AI thanks to ChatGPT, end users who have little to no understanding of the workings of AI and LLMs may assume that the output is accurate and unbiased. That’s why verifiability, in addition to transparency and explainability, will be essential for any AI you’re using, but especially for generative AI.

Considerations:

- Can you trace where/how AI output came from?

- Are biased outputs identifiable?

- Will your AI approach meet future regulatory scrutiny?
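
To make the traceability question concrete, here is a minimal sketch of one way to check whether each sentence of a generated summary can be traced back to the source document. It is an illustration under stated assumptions, not a production method: the function names (`trace_summary`, `split_sentences`, `tokens`), the word-overlap score and the `min_overlap` threshold of 0.6 are all hypothetical choices, and a real system would likely use a stronger matching technique.

```python
import re


def tokens(text):
    """Lowercase word tokens, used for a crude overlap comparison."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def split_sentences(text):
    """Naive sentence splitter: break after ., ! or ? followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def trace_summary(summary, source, min_overlap=0.6):
    """Link each summary sentence to its best-matching source sentence.

    The score is the share of the summary sentence's tokens that also
    appear in a source sentence. Sentences scoring below min_overlap
    (an assumed threshold) are marked as unsupported so a person can
    check them.
    """
    source_sentences = split_sentences(source)
    report = []
    for sentence in split_sentences(summary):
        sentence_tokens = tokens(sentence)
        best_score, best_source = 0.0, None
        for candidate in source_sentences:
            if not sentence_tokens:
                break
            score = len(sentence_tokens & tokens(candidate)) / len(sentence_tokens)
            if score > best_score:
                best_score, best_source = score, candidate
        report.append(
            {
                "sentence": sentence,
                "best_source": best_source,
                "score": best_score,
                "supported": best_score >= min_overlap,
            }
        )
    return report
```

Run against a claim note and its generated summary, the report shows which source sentence backs each statement and flags any statement that has no clear origin, which is the kind of evidence an end user or auditor would need to verify the output.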

Practical

AI systems should solve real-world problems, be ‘fit for purpose’ and provide tangible value to users.

The real capability that insurers (and any business) need is to understand how to apply this technology in a way that will drive real value. It’s less about diving right in and training an enormously complex language model and much more about where you apply it. As with any tech investment, the key benchmark to be applied is: how is it going to make a difference in terms of making money, saving money, improving the customer experience and improving our competitive positioning?

As we like to say, rush to understand the problems you’re trying to solve and choose the right tool for that problem rather than finding a problem for a certain tool to solve.

For insurance companies, a key element of the problems being solved concerns language. With that in mind, where are the language data flows really important in your business? Which use cases are actually viable? Then, get operational: how can we make this safe and effective?

Considerations:

- Do you have a process that prioritizes AI solutions based on their ability to solve real-world problems?

- Can you avoid experiments that never make it into production?

- Are there more sustainable AI approaches (e.g., rules-driven) that could be used as an alternative to LLMs?
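
As an illustration of what a rules-driven alternative can look like, the sketch below pulls a few fields out of a claim note with regular expressions instead of a language model. The field formats are assumptions made for the example (a policy number shaped like `POL-123456`, ISO dates, dollar amounts), and `extract_fields` is a hypothetical helper, not part of any product mentioned here.

```python
import re

# Assumed formats, chosen only for illustration.
PATTERNS = {
    "policy_number": re.compile(r"\b(POL-\d{6})\b"),
    "loss_date": re.compile(r"\b(\d{4}-\d{2}-\d{2})\b"),
    "amount": re.compile(r"\$(\d{1,3}(?:,\d{3})*(?:\.\d{2})?)"),
}


def extract_fields(note):
    """Return the first match for each rule, or None when a rule finds nothing."""
    result = {}
    for name, pattern in PATTERNS.items():
        match = pattern.search(note)
        result[name] = match.group(1) if match else None
    return result
```

Rules like these are cheap to run and easy to explain, since every extracted value maps to a visible pattern. They fit best where the language is predictable; free-form text is where a language model may still be the right tool.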

Human-centered

Decision makers need data and approaches that they can trust, and a human in the loop is necessary for both.

Humans are the primary creators and interpreters of the knowledge that we have. It therefore makes sense to include humans in the loop for any AI project, especially when it comes to understanding language and intent in a technical process, where human expertise is key to validating the correctness or truthfulness of an LLM’s output.

It’s worth emphasizing that we’re talking about the full circle: humans need to be involved on the front end to vet inputs, as well as on the back end to review outputs for bias, security and accuracy. And a human-in-the-loop approach must be integrated into your overall strategy and design requirements, not tacked on at the end. Internally, this will be crucial for building the buy-in and trust needed for successful adoption, and for the ongoing success of operations as you fine-tune and scale.

Considerations:

- How does AI capture the subject and process expertise of your experts?

- Can a user intervene to fix/prevent misleading, biased or wrong outputs?
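
One way to picture a human-in-the-loop design is a release gate that holds back any AI output until a person has reviewed it when the output is low-confidence or flagged. The sketch below is a simplified illustration: the `ModelOutput` and `Decision` types, the `finalize` function and the 0.9 confidence threshold are assumptions made for the example, not a description of any specific product.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ModelOutput:
    claim_id: str
    text: str
    confidence: float  # assumed to be a score between 0 and 1
    flags: List[str] = field(default_factory=list)  # e.g. "possible_bias"


@dataclass
class Decision:
    claim_id: str
    final_text: str
    reviewed_by: Optional[str]  # None means released without human review


def needs_review(output, threshold=0.9):
    """Low-confidence or flagged outputs must go to a person."""
    return output.confidence < threshold or bool(output.flags)


def finalize(output, reviewer=None, corrected_text=None, threshold=0.9):
    """Release an output, enforcing review where it is required.

    A reviewer can accept the text as is or supply a correction;
    without a reviewer, outputs that need review are blocked.
    """
    if reviewer is None:
        if needs_review(output, threshold):
            raise ValueError("human review required before release")
        return Decision(output.claim_id, output.text, None)
    final_text = output.text if corrected_text is None else corrected_text
    return Decision(output.claim_id, final_text, reviewer)
```

The design choice worth noting is that the gate is part of the release path itself, so review cannot be skipped, and the reviewer’s name is recorded alongside the final text for later audit.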

The Path Forward for Insurance AI

Responsible AI adoption in the insurance industry is not only attainable but also imperative. By adhering to the principles of sustainability, transparency, practicality and human-centeredness, insurers can leverage AI’s transformative potential while ensuring ethical and responsible use. The path forward demands a comprehensive and conscientious approach to AI integration that benefits both businesses and society at large.

The expert.ai Enterprise Language Model for Insurance (ELMI) helps insurers deploy capabilities like Generative Summarization, Zero-Shot Extraction and Generative Q&A safely and cost-effectively. Unlike general-purpose LLMs, ELMI is:

- Trained for Insurance

- Cost Optimized

- Secure & Compliant

- Cloud Agnostic

If you’re interested in expanding your knowledge, take the opportunity to learn more about ELMI.