A recent Fortune article opened with a question: Is A.I. the start of the “4th industrial revolution” or another dot-com bubble? (Their answer: It could be both.)

Since the launch of ChatGPT in November 2022, numerous high-profile investments and mainstream accessibility have driven AI solutions—in the form of generative AI and large language models (LLMs)—into front-page news. If you’re an AI vendor, it’s the topic of conversation at every client meeting, every media interview and every conference session. If you’re an enterprise, your CEO is asking your teams to understand if and how you can and should be using them.

The sense that this is a game-changing technology (at the very least, it has put the spotlight on what AI can achieve) has many CEOs and CIOs trying to figure out how it might play in their business ASAP. This excitement and momentum, even some degree of FOMO, is driving some companies to jump in headfirst. After all, a little FOMO is understandable when you hear that “73% of C-suite executives are actively using generative AI at their organizations.”

As an IBM report highlighted, CEOs are feeling the pressure to speed toward adoption, even if they’re not exactly sure how: two out of three CEOs are using generative AI without a clear view of how it will impact their workforce, and this is influencing further investment and decision making.

Whether you are going in headfirst, playing wait and see or are somewhere in between, it’s likely that someone in your company is already experimenting with ChatGPT or one of the many commercial LLMs available. But when it comes to knowing how to move ahead safely while capturing the competitive potential that such a technology could offer—and this is a question we’re asked often—it’s good to take a “first principles” approach to balance strategy and execution.

The Starting Point: Language is Data

The starting point for how businesses need to think about this new AI capability? Language is data. The technology that is driving this new wave of transformation is artificial intelligence, which is able to emulate some of the capabilities that were previously thought of as being uniquely reserved for human beings. This is not new.

What is new, and what has exploded, is the ability to exchange and create information using language—enabled by ChatGPT.

Why is this the starting point? Because language is everywhere in your business. It’s in the documents you create (the presentations, reports, research), it’s in your CRMs, it’s the direct communications you have with customers, it’s email. If you’re an insurance company, language is the content that populates your claims or risk assessment processes. If you’re a pharmaceutical company, it’s the information that drives drug discovery and clinical trial processes.

Language is data, and you should be thinking about how it drives the decisions, cost, revenue and risk in your business.

With that being said, let’s look at a simple framework for how you can approach this technology.

Understand the Capabilities of Generative AI and LLMs

Generative AI and large language models are absolutely something that you should be paying attention to. But rather than running to adopt, rush to fully understand the capabilities and limitations of the technology.

Let’s start with some definitions. Generative AI generates content. GPT is a type of large language model, and these models are trained on a large set of data. They work much like the text prediction feature we see in certain email clients and on our phones. Rather than understanding what the words mean, they use a linguistic technique known as word embedding to predict which word might come next. So, they are not able to understand facts; they just identify text patterns.
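To make the "predict the next word" idea concrete, here is a minimal sketch in Python. It is a toy, not how GPT is built: real LLMs use neural networks over word embeddings, while this example simply counts which word most often follows another in a tiny, made-up insurance corpus. The function names and the sample text are assumptions for illustration only.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        following[current_word][next_word] += 1
    return following


def predict_next(model, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical training text; a real model sees vastly more data.
corpus = (
    "the claim was approved . the claim was denied . "
    "the claim was approved after review ."
)
model = train_bigram_model(corpus)

print(predict_next(model, "claim"))     # was
print(predict_next(model, "was"))       # approved
print(predict_next(model, "portugal"))  # None (never seen in training)
```

The model picks "approved" after "was" only because that pairing appears most often in its training text, not because it knows anything about the claim. It also has nothing to say about a word it never saw, which is a small-scale version of "what they know depends on the data they were trained on."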

They are so convincing because of the massive amounts of data they are trained on. Consider that the data used to train the initial model behind ChatGPT included more than 1 million datasets from a wide variety of sources. This is equivalent to around 300 years’ worth of language.

With this in mind, here are some things they do well:

- Ability to generate natural language text: LLMs generate coherent and fluent natural language text, which has many applications for content creation, such as chatbots and text generation for writing tasks

- Ability to generate images, video and code

- High accuracy in selected NLP tasks, such as sentiment analysis, summarization and machine translation

Understand the Limitations and Risks of LLMs

To understand the risks and limitations of LLMs in business, it’s important to recognize that certain features are inherent because of how they are built and trained:

- They lack logic or intelligent thought

- They lack human-like reasoning skills

- Answers and responses cannot be explained

- What they “know” depends on the data they were trained on

- They are energy-intensive and compute-intensive: i.e., expensive to run

These features make the technology useful for generating new ideas, first drafts or even code. However, they also lead to many now well-publicized risks.

Since ChatGPT was first released, companies like OpenAI have added some human-supervised training (reinforcement learning with human feedback) to current iterations of GPT. However, significant risks around bias, personal data protection and copyright remain. Pending the outcome of certain legal cases and regulation around the world, their use could eventually become a real compliance issue.

At the end of the day, establishing a “ground truth” in models that have billions of parameters and are trained on massive amounts of data is always going to be difficult. Having guardrails or other controls in place to ensure that the output is correct is one way to approach this. Still, this can be resource intensive, and it doesn’t eliminate the risks.

For example, the U.S. Consumer Financial Protection Bureau recently warned banks that they would be held accountable for bad advice given by AI-enabled chatbots. Essentially, the black box nature of any machine learning model means that retraining will be difficult. For large language models, the scale of the data makes this far more time consuming and expensive: “To put this investment in context, AI21 Labs estimated that Google spent approximately $10 million for training BERT and OpenAI spent $12 million on a single training run for GPT-3. (Note that it takes multiple rounds of training for a successful LLM.)”

Focus on What Matters for Your Business

Don’t confuse success with just having generative AI.

As we like to say for any business challenge, focus on the problem that you need to solve and then find the right tool for the job. As we mentioned above, the starting point in this context is language. Show us any process or workflow that contains complex language at any volume that is valuable to you.

For example, if you have 50 or more people working routinely on a language-intensive process, this is probably valuable for your organization. Here, domain understanding is absolutely critical, and you have to be able to understand any AI technology in the context of the domains that matter to you.

Industries like insurance and pharma and life sciences have some very standardized text-based workflows where you want to capture information in an accurate and consistent way. These are both industries that contain a lot of intellectual property and personally identifiable information—things that require significant privacy and security controls, even regulatory controls.

A large language model that can also tell you how to plan your vacation to Portugal might not be the best use of resources if your domain of focus is insurance. Instead, you want to be able to dive deep into all the areas that are related to your customers, risk reports, legacy policies, etc. in your domain.

So, if we look back at the risks above, an LLM is not likely the entire solution, but a language model of some kind (perhaps smaller, more specific) could be part of the solution.

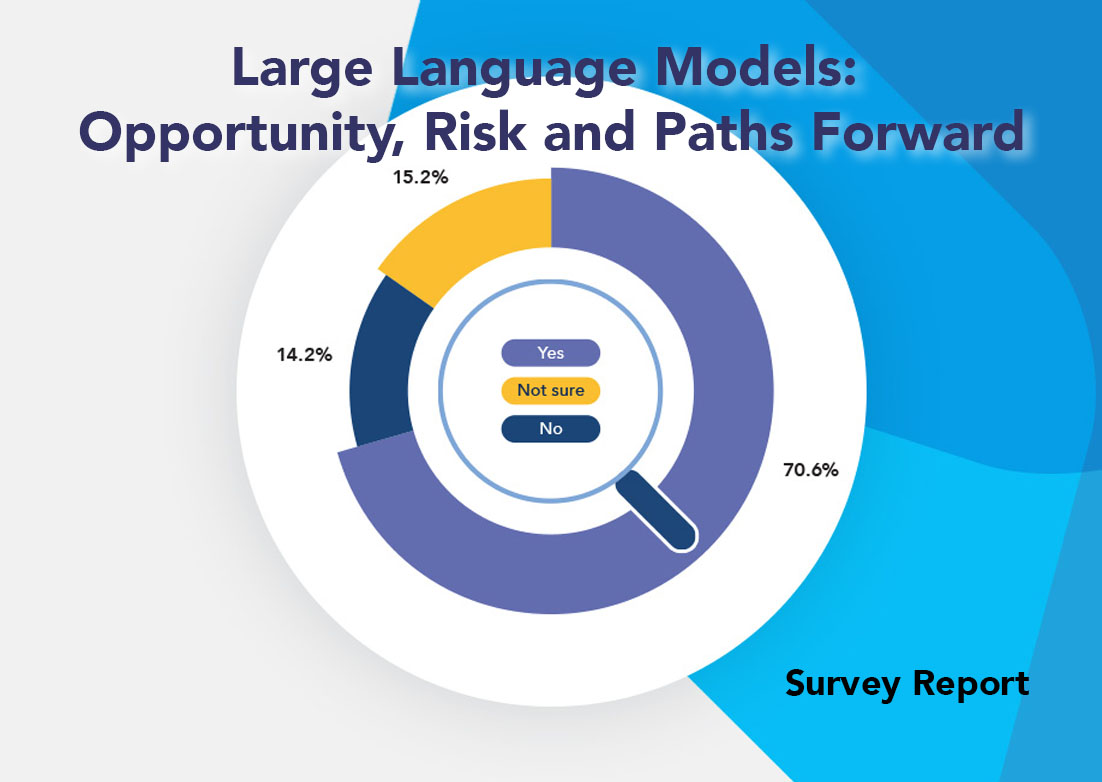

Just as we have seen incredible growth in ChatGPT users over a relatively short period of time, another dynamic is playing out in the open-source community: the shrinking of LLMs into much smaller models. This is in keeping with the findings of our own LLM survey last quarter: companies are interested in building their own domain-specific models where they have control over things like privacy and the data used to train them.

AI Solutions: Leveraging Partnerships and Expertise for Success

Today, the thinking around these technologies and their continued development are evolving very quickly. Lots of money is being spent, and business leaders in every industry are thinking about competitive advantage. Task forces are being created to help executives understand what they can and should do around generative AI and LLMs in the business.

This is often hampered by a lack of internal skills to evaluate or even implement these technologies. The IBM report mentioned above found that only 29% of teams feel their organization already has the in-house expertise to adopt AI, and one executive said that “Acquiring digital experts is one of our biggest challenges…”

When dealing with language, you must handle every stage of working with it, including ingestion, exploration, pre- and post-processing, cost optimization, accuracy validation, explainability, and enterprise governance. Additionally, you should consider the potential risks associated with language data leaving your organization’s boundaries.

None of these messy realities is solved by a large language model alone. Instead, you need end-to-end, integrated workflows that allow you to go from test to value. You need models that are relevant for your domain(s).

More than ever, companies need experts to help them do their best, at scale.

At expert.ai, our platform offers a repeatable way for you to handle the complexity of language to deliver value.

Through our experience in working with enterprise workflows in a variety of industries, we know that no single approach alone is good enough to solve every language problem. That’s why we combine symbolic AI with machine learning and LLMs to fit the best approach for each step of model development.

We can help you navigate the world of LLMs and generative AI to understand what the options are and what makes sense for your business.

For an example of what you can do with a domain-specific model, explore these top insurance use cases for AI.